Technical and electrical aspects of neurofeedback training.

Design Components and Settings

Oscilloscope and Spectrum Analyzer

The oscilloscope and spectrum analyzer are just displays. They have nothing to do with the training, just how the overall signal is portrayed on the screen.

Tone Generators

Tone generators have always come in several forms: some free-standing (the binaural beat is always playing, e.g. alpha theta), others linked conditionally to thresholds or AND objects (beats sounding only when the brain is out of the target range), and others linked to frequency activity, so they track the peak frequency and change beat rates to “herd” the brain’s activity in a specific direction. For example, the beta percent up takes the peak frequency and compares it to 14 Hz. If the peak is above, it subtracts 2 Hz from the peak and sets the beat rate there (a 19 Hz peak would result in a 17 Hz beat). If below, it adds 1 or 2 Hz to the peak and sets the beat rate there (e.g. a 4 Hz peak would result in a 6 Hz beat).
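The peak-tracking rule can be sketched in a few lines. This is only an illustration, not BioExplorer's own code: the function name and parameters are hypothetical, the step below 14 Hz is assumed to be 2 Hz (the text allows 1 or 2), and the behavior at exactly 14 Hz is an assumption.

```python
def beat_rate_hz(peak_hz, pivot_hz=14.0, step_down_hz=2.0, step_up_hz=2.0):
    """Return a beat rate that nudges the peak frequency toward the pivot."""
    if peak_hz > pivot_hz:
        # Peak is too fast: beat a little below it to pull it down.
        return peak_hz - step_down_hz
    # Peak is at or below the pivot (assumed case): beat a little above it.
    return peak_hz + step_up_hz


print(beat_rate_hz(19.0))  # 17.0
print(beat_rate_hz(4.0))   # 6.0
```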

Thresholds

All the 2 and 4-channel multiband coherence designs have both auto (on Instruments 1–the client page) and manual (on Instruments 2 –trainer page) thresholds. If either is in scoring range, there is feedback. The trainer shouldn’t need to do anything but watch. Each band will be passing at least 80% of the time–more if they are below the fixed/manual target. With 4 bands, at least 40% of the time all will be in pass range at the same time, and the client will hear the deep tone as the video begins to move. The more the client stays in the target range, the more this happens (up to 100%).

There is always feedback activity in the chord, some notes sounding. It’s easy enough to increase the feedback rates. In each of the auto thresholds, click in the button area beneath the bar graph. The button shows the percent of time the thresholds will be met. Each click will raise the automatic target by 1%. 90% reward pretty much assures that the minimum time with video and the deep tone will be around 65%.

BPS

The bps reading is bits per second, a measure of the rate at which the software transforms the analog (waveform) to a digital (numeric) reading. Higher bps rates relate to greater resolution and finer detail, always at a trade-off though. 12 or 16 bits are generally considered to be quite acceptable by the engineers with whom I’ve spoken. 8 is an acceptable lower end.

MIDI Sounds

MIDI sounds have 12 tones per octave (7 white notes and 5 black notes on a piano). 60 is middle C on the piano, so 48 would be the C below that, 72 would be the C above it, etc. You can choose any key and find the root notes in that key (like the C in the key of C). If C is 60, C-sharp is 61, D is 62, E-flat is 63, E is 64 (the third in the key of C), F is 65, F-sharp is 66, G is 67 (the 5th in the key of C), etc. To make a major chord in C, you would set one threshold to play 60, another to play 64 and the third to play 67.

If you want to create a chord in another key, then you need to know the 3 or 4 notes that make up that chord and find them on the MIDI scale. For example, in the key of F, your root would be 65, your third would be 69 and your fifth would be 72.
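If you would rather not count keys by hand, here is a small sketch that works out the note numbers for a major or minor triad. It relies only on the standard MIDI numbering (middle C = 60, one step per semitone); the function and table names are made up for the example and have nothing to do with the training software.

```python
# Semitone offsets above C within one octave.
NOTE_OFFSETS = {
    "C": 0, "C#": 1, "D": 2, "Eb": 3, "E": 4, "F": 5,
    "F#": 6, "G": 7, "Ab": 8, "A": 9, "Bb": 10, "B": 11,
}
MIDDLE_C = 60


def major_triad(root_name, octave_c=MIDDLE_C):
    """Root, major third (+4 semitones) and fifth (+7 semitones)."""
    root = octave_c + NOTE_OFFSETS[root_name]
    return [root, root + 4, root + 7]


def minor_triad(root_name, octave_c=MIDDLE_C):
    """Root, minor third (+3 semitones) and fifth (+7 semitones)."""
    root = octave_c + NOTE_OFFSETS[root_name]
    return [root, root + 3, root + 7]


print(major_triad("C"))  # [60, 64, 67]
print(major_triad("F"))  # [65, 69, 72]
print(minor_triad("C"))  # [60, 63, 67]
```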

Obviously major and minor seventh chords would add a fourth note, harmonic and melodic minor chords would usually drop the value of one of the notes by one step on the MIDI scale. If you want to use a Blues scale or Pentatonic or any of the other options, you’ll need to know enough music theory to identify the appropriate notes in those.

Another very nice option is to play a melody line with the pitch of the note being played dependent on where in the range of training values the current reading is. For example, if theta is varying in a range between 10 and 22 microvolts (a range of 12), and you set your note range to 60 to 72 (also a range of 12), you can set the software to raise the note one step for each microvolt the signal increases–or to decrease the pitch one note for each microvolt. The melody literally becomes the feedback.
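A rough sketch of that mapping, using the theta example above (10 to 22 microvolts onto notes 60 to 72). The function name and the clamping of out-of-range readings are my assumptions for illustration; the software may handle the edges differently.

```python
def amplitude_to_note(amplitude_uv, low_uv=10.0, high_uv=22.0,
                      low_note=60, high_note=72, invert=False):
    """Map an amplitude reading onto a MIDI note number."""
    # Readings outside the training range are clamped to its edges (assumption).
    clamped = min(max(amplitude_uv, low_uv), high_uv)
    fraction = (clamped - low_uv) / (high_uv - low_uv)
    if invert:
        # Pitch falls as amplitude rises.
        fraction = 1.0 - fraction
    return low_note + round(fraction * (high_note - low_note))


print(amplitude_to_note(10.0))               # 60
print(amplitude_to_note(16.0))               # 66
print(amplitude_to_note(22.0))               # 72
print(amplitude_to_note(10.0, invert=True))  # 72
```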

DVD Feedback

You can use the brightness and volume controls on the DVD, instead of connecting Play/Pause to Enable on the DVD player, to avoid the choppiness of starting and stopping all the time. Use a Yes/No output to inform the Brightness or Volume (or both) controls. Then set the input values (on the DVD Brightness properties, for example) to 0 and 1 and the brightness levels to something like 400m and 1. You can set a smoothing/averaging for this to something like 125m.
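To picture what those settings do, here is a sketch under two assumptions: that "400m" means 0.4 (so the picture dims to 40% rather than going black), and that the smoothing behaves like a trailing average. Both function names are hypothetical, and the window is given in samples rather than milliseconds to keep the example simple.

```python
def map_range(value, in_low=0.0, in_high=1.0, out_low=0.4, out_high=1.0):
    """Linearly map a Yes/No (0 or 1) input onto a brightness or volume level."""
    fraction = (value - in_low) / (in_high - in_low)
    fraction = min(max(fraction, 0.0), 1.0)
    return out_low + fraction * (out_high - out_low)


def smooth(values, window):
    """Trailing moving average over the last `window` values."""
    smoothed = []
    for i in range(len(values)):
        chunk = values[max(0, i - window + 1): i + 1]
        smoothed.append(sum(chunk) / len(chunk))
    return smoothed


yes_no = [0, 0, 1, 1, 1, 0]
levels = [map_range(v) for v in yes_no]
print([round(x, 2) for x in smooth(levels, window=2)])
```

The smoothed levels step between dim and bright over a couple of samples instead of jumping, which is what takes the choppiness out of the playback.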

The other option is to set your thresholds higher, so the client is scoring 80-85% in the beginning. As he improves, his scoring should go up to 90 or higher and the brain will be “rewarded” with very smooth playback of the DVD. NF is brain shaping, not brain disciplining.

How to change window size for DVD player

From the instruments menu choose edit layout.

Resize the windows as you would in any other Windows file. Be careful not to click the X and close the window, which removes it from the design.

Changing DVD Reward Types

Setting up the volume control instead of start-stop control for CD or DVD play will require you to change the Signal Diagram. You might want to hold off on that until you get a little more comfortable with the basics of the program.

Copying and Pasting Among Designs

There is no way to copy and paste among signal diagrams.

Bandpass Filters

The bandpass filters simply define the frequency to be passed. They are like the Frequency Bands button in BrainMaster, which does not say anything about the protocol.

The threshold objects HAVE to have a definition of whether the signal is to be rewarded or inhibited. If you go to the Objects menu and choose any of the Thresholds on the list, you’ll open the Properties window for that threshold. On that window, in the Threshold Tab, the bottom of the three check boxes is “Increase”. If that box is checked, then the signal is rewarded above the threshold; if it is NOT checked, the signal is inhibited to stay below the threshold. There’s no option other than those two. Also, if you look on the Instruments1 window, there are six Threshold Bar Graphs. Each one has, in its second-to-bottom line below the graph, either Decrease or Increase.

You can open the Properties window for any of the Bandpass filters and/or the Threshold objects in the Objects menu, or by double-clicking it on the Signal Diagram page, or by right-clicking (the Thresholds) on the Instruments1 page. This will allow you to change the desired training range or filter type or order for the Bandpass filters; it will allow you to change various elements on the Thresholds as well.

Ratio Output

The Ratio output from the BioExplorer threshold reports the ratio of the actual value divided by the target value. So, for example, if the target is set for 10 microvolts and the actual reading is 12 microvolts, the ratio output would give 1.2 or 120% of the target. Using a standard Pass/Fail output might, for example, make PacMan move or stop moving, but whether the client was barely over or WAY over the target, PacMan would move at the same speed. Using a Ratio output, PacMan could move faster or slower, depending on how far above the target the brain was.

All this would work fine if you were training to increase something. But often we are training to decrease a frequency’s activity. For example, with the same target of 10 uV, a client who is barely under it at 9 (9/10=90%) would move PacMan faster than one who is WAY under it at 5 (5/10=50%), so PacMan would actually go SLOWER when the client was doing better. In that case, you might choose to Reverse the ratio output. By doing so, the 50% (5/10) would be changed to 200% (10/5), and the feedback would make more sense.
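The arithmetic of the normal and reversed ratio, as a small sketch. The function name and the `reverse` flag are illustrative only, not the actual BioExplorer property names.

```python
def ratio_output(actual_uv, target_uv, reverse=False):
    """Ratio of actual to target, or target to actual when reversed."""
    if reverse:
        # For inhibits: the further below target, the larger the ratio.
        return target_uv / actual_uv
    return actual_uv / target_uv


print(f"{ratio_output(12, 10):.0%}")               # 120%
print(f"{ratio_output(9, 10):.0%}")                # 90%
print(f"{ratio_output(5, 10):.0%}")                # 50%
print(f"{ratio_output(5, 10, reverse=True):.0%}")  # 200%
```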

Rewards and Inhibits

The general rule, for me, is to minimize the number of rewards and inhibits in a session, simply because of the difficulty of providing good feedback. With one threshold for the brain to pass, you can set a target to provide 80% feedback and have a pretty good chance of doing so. When you add a second training parameter, you have the potential, even setting both at 80%, to reduce feedback to about 65% (if the two targets pass independently of each other, both are met .8 times .8, or 64%, of the time). Adding a third target at 80% potentially reduces feedback to about 50% (.8*.8*.8).
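Here is a quick way to check these numbers for any set of targets. It is a sketch with two assumptions: the product applies when the thresholds pass independently of each other, and the second function gives the floor you would hit if the misses on each threshold never overlapped. Real brains will land somewhere between or above, since bands tend to move together.

```python
def feedback_rate_independent(pass_rates):
    """Share of time all thresholds pass, if they pass independently."""
    rate = 1.0
    for p in pass_rates:
        rate *= p
    return rate


def feedback_rate_worst_case(pass_rates):
    """Lower bound: the misses on each threshold never overlap."""
    return max(0.0, 1.0 - sum(1.0 - p for p in pass_rates))


for rates in ([0.8, 0.8], [0.8, 0.8, 0.8], [0.8] * 4, [0.9] * 4):
    print(len(rates), "targets at", f"{rates[0]:.0%}:",
          f"{feedback_rate_independent(rates):.0%} if independent,",
          f"{feedback_rate_worst_case(rates):.0%} worst case")
```

Four bands at 80% come out at about 41% if independent, and four at 90% at about 66%, which matches the figures given for the multiband designs.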

The inhibit threshold should ideally shape the brain’s activity toward cutting off the highest bursts of the target activity (outliers). If we are talking about theta as our inhibit, then we want to get rid of the most excessive theta spikes, which will reduce the average theta level and reduce variance as well. Of course, if the threshold is too responsive, it really won’t perform that task. Whenever theta spikes, the target will follow it up quickly and come back down more slowly, so the brain may actually be getting positive feedback exactly when it is doing what we want it to stop doing. Therefore, I like to use a longer epoch (generally 30-60 seconds) for my inhibit thresholds. If there is a true trending up or down in theta, the threshold will follow, but it won’t be affected by spiking.

The reward threshold has a different problem. In a great majority of clients, there will be an overall reduction in amplitudes in response to training preceding clinical improvement. Theta amplitudes will come down and (gasp) beta amplitudes will as well. In most cases, the inhibited activity will fall further than the rewarded activity, resulting in an improvement in the ratio/relationship between the two types of activity, and that’s what we want to see. But if we have recalcitrant thresholds (manual or automatic) on the reward frequency, it’s perfectly possible–in fact quite likely–that when the brain quiets, with the inhibited frequency dropping significantly and the ratio improving, the rewarded frequency will also drop, and the brain will receive no positive feedback when it’s doing exactly what we want it to do. So I would use a shorter epoch, maybe 15 seconds, on that threshold.

As for target percents, here there is a real range of opinions. My best advice here is to say that the answer depends on the client and the training task. When I began working with brains, I followed Lubar’s model which rewarded about 40-50% of the time. Clients expected that because that’s what they experienced from the very beginning, and I really never had any complaints about such a lean reinforcement schedule. After I trained with the Othmers, I tried the 70-80% reward level, and it did seem to work better with more wound-up clients–worse with more internal ones. Very anxious clients tend not to let go and relax into the training when reward levels are low.

So here is what I do when I must (or choose to) use contingent feedback (beeps that come only when all the training requirements are met): I start with auto thresholds with epochs set as stated above. After a minute or two, when the brain starts to settle in, I switch the inhibit threshold to manual. Now the client’s brain has a fixed target against which to exercise itself. The better it does, the higher its scoring percent can become. But the reward frequency can trend down with the inhibit frequency, and the auto threshold allows it to continue to score.

But let me suggest two other options that are possible with BioExplorer.

1. You can, with BE, combine continuous feedback AND contingent feedback in the same protocol. A tone, which rises and falls in pitch and/or volume can be playing all the time, giving the brain information about what it is doing. And, I can set a specific target which, when met, results in some additional feedback. Because that’s not the only sound, I like to set the auto-threshold for that band to give 10-20% reward, so it is heard only when the brain is doing its very best. A number of the BE protocols in my package do exactly that, and they seem to work very well.

2. You can also skip the whole question of setting targets on rewards and inhibits by using either percent or ratio trainings. For example, instead of setting a threshold on theta and another on beta, just set up a power ratio of theta divided by beta and train for that number to go down. That simplifies things for you and for the client.
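The ratio idea in option 2 can be sketched like this. I am assuming "power" means the mean of the squared, already band-filtered samples; the function names are hypothetical and the sample values are made up purely to show the calculation.

```python
def mean_power(filtered_samples_uv):
    """Mean squared amplitude of an already band-filtered signal."""
    return sum(s * s for s in filtered_samples_uv) / len(filtered_samples_uv)


def theta_beta_power_ratio(theta_samples_uv, beta_samples_uv):
    """One number to train down instead of two separate thresholds."""
    return mean_power(theta_samples_uv) / mean_power(beta_samples_uv)


theta = [2.0, -2.0, 2.0, -2.0]   # made-up filtered theta samples
beta = [1.0, -1.0, 1.0, -1.0]    # made-up filtered beta samples
print(theta_beta_power_ratio(theta, beta))  # 4.0
```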

Feedback Delays

I try to keep the feedback latency to below 250ms. Feedback latency is the delay between the moment something happens in the brain and the time the brain receives feedback regarding that event. Obviously the shorter the delay, the better the brain can learn from the feedback.

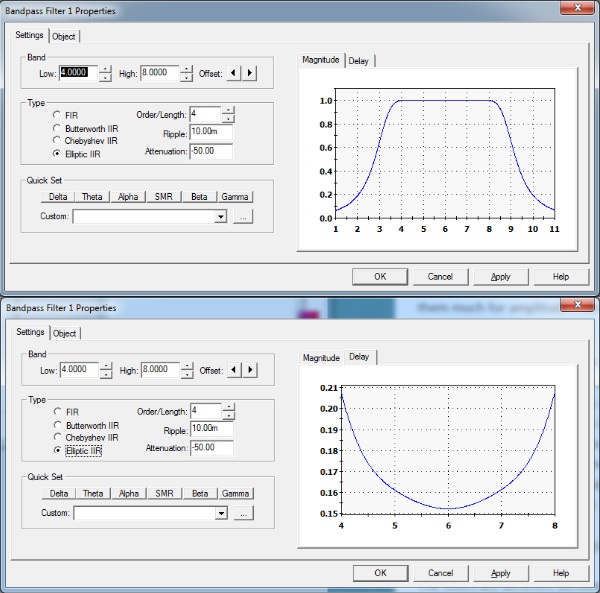

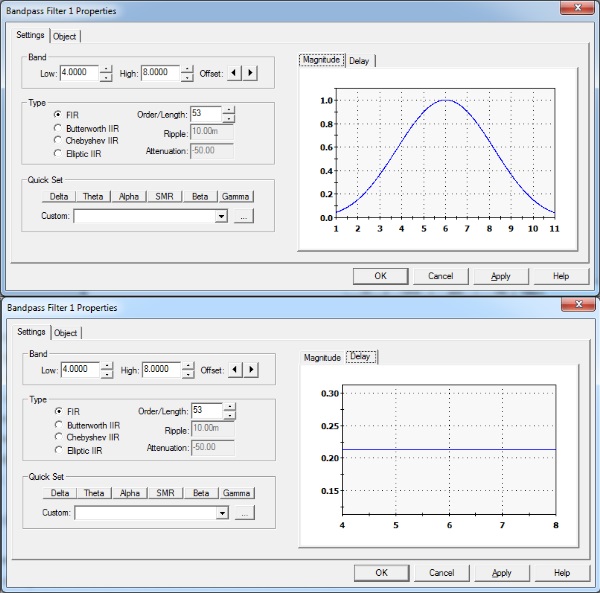

There are four elements in the main feedback channel where delay can enter: Source, bandpass filter, threshold and feedback device.

As a general rule, the largest delay is unavoidably in the Bandpass Filters, since you are always trading between the filter’s accuracy and its speed. In the Filter Properties window you can compare the graph showing Magnitude (accuracy) and the graph showing Delay. You can reduce the filter order and filter type in order to find the best combination of accuracy and speed. It’s worth noting that the wider the band you are filtering, the faster AND more accurate the filter will be. Try setting a filter with a width of 3 Hz (e.g. 12-15) and change the filter order to get the delay below 200 ms. Then change the training band to 6 Hz (e.g. 15-21 Hz) and you’ll see that you can increase the filter order, thus improving filter accuracy, and probably still end up with a shorter delay.

Thresholds are a potential source of delay–and also can be set to produce almost zero delay. If you use an average period (settings) other than 0.0, that value is added to the feedback delay. Some people like to set these to 125 ms or even higher to “smooth out” the look of the bar graph display, but (as is clearly stated in the Help file) that delay is directly added to feedback latency. So even if you have a bandpass filter delay of, say, 200 ms, an average period of 125ms on the threshold will bump the total delay up to 325ms. I prefer to leave this at zero, and if you want the client to watch the bar graph and find a zero delay to make it too jumpy, use a Bar Graph object on the client screen. You can put an average/smoothing delay in a Bar Graph, and it won’t have any effect on true feedback, because it is just a display–not in the main feedback channel.
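A simple latency budget makes the point. This sketch just adds up the delays in the main feedback channel and compares the total with the 250 ms goal; the function and parameter names are mine, and the source and feedback-device delays are lumped into one optional figure because I have no numbers for them.

```python
LATENCY_GOAL_MS = 250.0


def feedback_latency_ms(filter_delay_ms, average_period_ms=0.0, other_delays_ms=0.0):
    """Total delay from brain event to feedback, in milliseconds."""
    return filter_delay_ms + average_period_ms + other_delays_ms


for avg in (0.0, 125.0):
    total = feedback_latency_ms(filter_delay_ms=200.0, average_period_ms=avg)
    verdict = "within goal" if total <= LATENCY_GOAL_MS else "over goal"
    print(f"average period {avg:.0f} ms -> total {total:.0f} ms ({verdict})")
```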

Epoch is the number of seconds BE uses to calculate the Auto threshold. If it is 30.0, then BE will take the average of the actual data from the past 30 seconds and figure out where the target scoring threshold would be (e.g. where would the target be for the client to have been scoring, say, 75% during the past 30 seconds?). That doesn’t slow down feedback at all. 30-40 seconds is a good value here, since it keeps the target from responding too immediately to bounces in brain activity.
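One way to picture what an auto threshold does is sketched below. I do not know BE's internal calculation, so treat this as an assumption: it sorts the readings from the epoch and picks the level the client would have passed the requested percent of the time. The function name and arguments are hypothetical.

```python
def auto_threshold(epoch_samples_uv, target_percent, increase=True):
    """Level that `target_percent` of the epoch's samples would have passed."""
    ordered = sorted(epoch_samples_uv)
    n = len(ordered)
    # Number of samples that should count as passing, at least 1 and at most n.
    passing = min(max(round(n * target_percent / 100.0), 1), n)
    if increase:
        # Reward: a sample passes when it is at or above the threshold.
        return ordered[n - passing]
    # Inhibit: a sample passes when it is at or below the threshold.
    return ordered[passing - 1]


epoch = list(range(1, 101))  # made-up readings of 1..100 microvolts
print(auto_threshold(epoch, 75, increase=True))   # 26
print(auto_threshold(epoch, 75, increase=False))  # 75
```

A longer epoch simply means more samples in the list, so a single spike moves the result less.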

Duration is a little trickier. It is the period of time that the client must STAY in the target range in order to receive feedback. Let’s imagine that you set that to 1.0 (1 second.) The client holds theta down and beta up relative to his targets–holds it for .85 seconds–during which time he gets NO information telling the brain that it is doing exactly what we want it to do. And if he then pops above the threshold for 10 ms, he has to start all over again. He NEVER finds out that he was doing what we wanted. If we set the Duration at 250ms, then the client must stay in the training range for 1/4 second before he finds out he’s in the range. You could make a pretty good argument that you have added 250ms to the feedback latency.

On the other hand, if the brain is rewarded every time it pops over the threshold for a millisecond, you may not be training it to maintain stability. In reality, most of the time you use a higher duration you have to set the target lower in order to let the client score a reasonable amount. So the question is: “would you rather teach the brain to stay above a low threshold for a longer time or bounce above a higher threshold even for a short time?”
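The Duration rule can be sketched as a counter that resets whenever the signal leaves the target range. This is an illustration of the idea, not BE's code; the function name is hypothetical, and I assume each in-range sample counts as one sample period of time in range.

```python
def sustained_pass(in_range_flags, sample_period_ms, duration_ms):
    """True at each sample where the signal has stayed in range long enough."""
    results = []
    time_in_range_ms = 0.0
    for in_range in in_range_flags:
        if in_range:
            time_in_range_ms += sample_period_ms
        else:
            # Any excursion, however brief, starts the clock over.
            time_in_range_ms = 0.0
        results.append(bool(in_range and time_in_range_ms >= duration_ms))
    return results


flags = [True, True, True, False, True, True]
print(sustained_pass(flags, sample_period_ms=100, duration_ms=250))
# [False, False, True, False, False, False]
print(sustained_pass(flags, sample_period_ms=100, duration_ms=0))
# [True, True, True, False, True, True]
```

With a 250 ms duration the client hears feedback only on the third sample; with zero duration every in-range sample is rewarded.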

One way you can provide the brain with both kinds of information is to give it double feedback (you’ll find this in a number of the brain-trainer designs). You can give immediate feedback with Duration 0.0, using something like a MIDI sound that is controlled by a zero duration threshold–or which has nothing at all coming into the Enable button. In other words, you are providing Continuous Feedback by changing the pitch or volume (or both) of the sound. Then you give Contingent feedback (all requirements must be met at the same time) with something like the Score object and set your duration higher. The brain is always getting almost immediate information about what it is doing, and it is also getting information about how long it is doing it.

About Filters

If you look at the filter graphs in your training software, compare an IIR filter, like an Elliptic, and an FIR filter. You’ll see that the IIR filter tends to focus on producing a stable response across the whole frequency band of the filter, but the time response changes throughout the filter band.

Look at an FIR filter, and you’ll see that the delay graph is a straight line at a specific number of milliseconds and it is the same all the way across the band being filtered; on the other hand, the frequency response tends to be quite center-loaded: very accurate in the middle of the band but falling off fairly sharply toward the edges.

In other words, with an Elliptic IIR filter, amplitude activity will be reported for a 4-8 Hz filter almost 100% from every frequency from 4 to 8 Hz, and activity outside that band will be reported at a much lower percent or zero. But at 4 Hz, let’s say, there might be a delay of 130 milliseconds; at 4.5 Hz a delay of 150 ms; at 5 Hz a delay of 180 ms, etc. With the FIR filter the delay would be exactly the same at 4 Hz, 4.5, 5, etc. But only at 6 Hz, the middle of the band, would the amount of amplitude be 100% accurately reported. And by the time you got to 4 Hz or 8 Hz, the edges of the band, only 60% of it might be reported.
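The flat delay line of an FIR filter follows from simple arithmetic. For a symmetric (linear-phase) FIR filter, the delay is half the filter length, the same at every frequency. The sketch below assumes that kind of FIR and a 256 samples-per-second rate; I do not know how BioExplorer's FIR is actually built, and the function names are hypothetical.

```python
def fir_delay_ms(num_taps, sampling_rate_hz):
    """Constant delay of a symmetric (linear-phase) FIR filter."""
    return (num_taps - 1) / 2.0 / sampling_rate_hz * 1000.0


def max_taps_for_delay(max_delay_ms, sampling_rate_hz):
    """Longest symmetric FIR that stays within a delay budget."""
    return int(2 * max_delay_ms / 1000.0 * sampling_rate_hz) + 1


print(fir_delay_ms(65, 256))         # 125.0
print(max_taps_for_delay(200, 256))  # 103
```

More taps give a sharper filter, so this is the same accuracy-versus-speed trade you make with filter order on the IIR types.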

The Butterworth filter is a center-loaded filter which loses accuracy at the edges. If you look at the Magnitude graph to the right of your Filters Properties box, you’ll see a graph of the filter’s response curve. On, for example, a 12-16 Hz filter, the 3rd order Butterworth will have a nice smooth top running directly on the 1.0 line, but it won’t go all the way from 12 to 16 Hz (the numbers along the bottom of the graph.) In fact it starts to “roll off” at around 13Hz and 15Hz. The higher the filter order, the more complete coverage the filter will have, but there is a trade-off: Check the Delay tab and you’ll see that as you increase the number in the Order box, the delay added by the filter goes up pretty sharply.

The Chebyshev and Elliptic are almost exactly the same. The FIR provides some different characteristics, as does the Butterworth. The more you get into signal processing and using it in training, the more you recognize that each has its benefits. I would only use FIR for coherence, phase or synchrony, and I’ve learned that many clients prefer the “softer” Butterworth for rewards, while the Elliptic works well for inhibits. Also with some bandwidths, a Butterworth will provide a faster response than an Elliptic. For example, for those who want to try to train down in the 0-0.10 Hz band more recently popularized by the Othmers, a Butterworth is really the only option. Its delay, even as a first-order filter, is over a second, but it does have a nice little peak right in the center of the band, and the Elliptic just can’t do it at any speed.

The Chebyshev and Elliptic filters tend to be better at covering the whole band, though they have a ripple along the top, and they are often faster than the Butterworths. If you recall from the BioExplorer workshop, we went through different options of filter type and order and looked at the curve and delay in each. It’s a painful experience of trade-off. But here’s the real clinker: clients sometimes experience the sharper filters as being a bit “harsh”, while the more center-loaded filters are experienced as being “gentler”.

The FIR is not a frequency-based filter as much as a time-based one. Look at the difference in the graphs, and you’ll see the delay is exactly the same all the way across the frequency band on an FIR, where it varies with the IIR (Infinite Impulse Response) filters. FIR filters aren’t used much in training in anything I’ve seen to date.

I usually use Elliptic filters for inhibits (e.g. 2-9.5 Hz), as high an order as I can get without passing 200 ms delay, and Butterworth filters (e.g. 10-14 Hz) with the order set to keep the delay about the same as that for the inhibit filters. With the Butterworths, because they are so center-loaded, I often change the frequency band to get the center/peak around 10 Hz or a little above.

The FIR filter should always be used for coherence or synchrony training. The timing issues make this critical. If you look at the Delay tab on the graphs to the right of the Bandpass Filter properties window, you’ll see, as you switch through the filter types, that delay is variable for different frequencies for Butterworth, Chebyshev and Elliptic filters. The FIR filter shows a straight line across the graph, registering exactly the same delay for each frequency. Since coherence is based on phase, and phase is based on timing relationships, trying to train coherence (or synchrony, which is coherence in phase) with a filter that introduces timing differences at different frequencies is a problem. I don’t use them much for amplitude training, because I find that the IIR’s are faster and more accurate.

Training to increase a frequency is usually done in brain-trainer Designs using Butterworth filters, while those in the assessment generally use Elliptic. The Butterworth “rolls off” within the training band. If you look at the graph of the filter in the Properties window of the Filter object, you’ll notice that a filter picking up 12-15 Hz doesn’t stay at 100% (1.0) all the way from 12-15. The edges of the filter “roll off” before the defined edges. The elliptic filter rolls off OUTSIDE the band defined or, as in the assessment, we use a high enough filter order that the edges drop straight down, so they pick up 100% of the defined band and 0% of the rest.

Assessment vs Training Filters

The filters are different between the assessment and training files, because in the assessment there is no feedback, so delay is not an issue. We use the most accurate filters to give the clearest possible view of the frequency bands.

BioExplorer and Low Frequencies

There is no way I can see to set frequency bands in BioExplorer below 0.0 to 0.1 (which means a central frequency of 0.05 or one pulse every 20 seconds!) The software won’t accept a filter that filters 0.0 to 0.01 (central frequency of 0.005, or one pulse every 200 seconds).

At 0-0.1 Hz as a reward band (which BE does just fine), you have some problems caused by the physics of digital signal processing:

1. An elliptic filter, 1st order, which only produces a delay of 150 ms, isn’t very accurate. In fact, it passes 200% of the signal around the area they want to measure but 100% of all activity up as high as nearly 2 Hz. Not exactly training 0-0.1 Hz. Raising the filter order to 2 provides a little more specific and accurate filter–but the time delay goes up around 1.5 seconds and above.

2. The Butterworth filter the Othmers usually use, in its fastest form (1st order) does provide a fairly accurate reading of the band they want to train, but the delay ranges from about 1/2 second to about 2.25 seconds. In other words, in order to actually SEE the frequency they say they are training, they have to set up the filters so they literally give the feedback between one and a half and two and a half seconds AFTER the event happens in the brain. That’s the equivalent of trying to train a puppy by giving him a treat about 10 minutes after he does something you tell him to do.

I leave it to you to imagine what kind of accuracy and delay are provided by asking a filter to measure a band that is 2/100 of a Hz (the above are 10/100 Hz wide).

Sampling Rate

The sampling rate is the number of times each second the amplifier grabs a sample of brain activity and brings it to the software. It shouldn’t affect the frequency (Hz) or amplitude (microvolts) at all, except that perhaps in very high frequencies a very low sampling rate might show less detail or, in extreme cases, cause a problem called aliasing.

In electrical engineering there is the Nyquist Sampling Theorem, which states that a digitized signal can only be accurate up to a frequency of less than one-half the sampling rate. In other words, a machine that sampled at 64 Hz (there aren’t any) should theoretically give an accurate digital picture of frequencies up to a little below 32 Hz. A 128 sampling rate should be fine for digitizing waves of frequencies up to nearly 64 Hz. A 256 sampling rate should allow accurate digitizing of a signal up to just below 128 Hz.
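A short sketch of the limit, and of what aliasing does to a signal above it. The folding formula is the standard one for a pure sine wave; the function names are my own.

```python
def nyquist_limit_hz(sampling_rate_hz):
    """Frequencies must stay below this to be digitized accurately."""
    return sampling_rate_hz / 2.0


def alias_frequency_hz(signal_hz, sampling_rate_hz):
    """Apparent frequency of a sine wave after sampling."""
    folded = signal_hz % sampling_rate_hz
    return min(folded, sampling_rate_hz - folded)


for rate in (64, 128, 256):
    print(rate, "samples/sec -> limit", nyquist_limit_hz(rate), "Hz")

# A 40 Hz signal sampled at only 64 per second shows up as 24 Hz.
print(alias_frequency_hz(40.0, 64.0))   # 24.0
# At 256 per second the same signal is reported correctly.
print(alias_frequency_hz(40.0, 256.0))  # 40.0
```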

For the less technically-minded, I use an analogy to discuss sampling: Two people are placed by a window and told to count the ants crawling across it. One is allowed to see the window a certain number of times per second. The other is shown the window twice as often. The bigger and slower the ants, the more likely the two counters will come up with the same count. When the ants are smaller and faster, however, it is likely that the person who only looks half as often will miss some.

For EEG training the difference between 256 and 2048 samples per second is meaningless. You are training signals that complete a full cycle in between 1 second (very slow delta) and 1/40th of a second. Even at 256 samples per second, you would have more than 6 samples for each waveform at 40 Hz. At 2048 (which might be useful for training EMG, where you might be looking at signals with cycles as short as 1/500th of a second), there would be about 51 readings for every waveform of 1/40th second.
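The samples-per-waveform figures are just the sampling rate divided by the signal frequency, as this small sketch shows (the function name is illustrative):

```python
def samples_per_cycle(sampling_rate_hz, signal_hz):
    """How many samples land on one full cycle of the signal."""
    return sampling_rate_hz / signal_hz


for rate in (256, 2048):
    for freq in (1, 40):
        print(f"{rate} samples/sec, {freq} Hz signal: "
              f"{samples_per_cycle(rate, freq):.1f} samples per cycle")
```

At 40 Hz this gives 6.4 samples per cycle at 256 and 51.2 at 2048.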

All EEG amplifiers work at 256 (at least the more recent ones), even though some may oversample at 1024 or 2048. Those then “down-sample” to 256, so it’s kind of like buying a digital camera that shoots 8 megapixel images and then putting them on the internet at a resolution of less than 1 megapixel. You have really gained nothing by it.

According to the Nyquist Theorem of signal processing, the sampling rate must be at least two times the highest frequency to be sampled to assure an accurate signal. Of course, if you want to sample up to 56 Hz, that would mean that sampling would have to be at least 112 Hz, so 120 would be acceptable. The standard for EEG for many years now has been 256. When you see amps trumpeting their sampling rates of 512 or 1024 or even 2048, that’s marketing hype. Those rates are potentially valuable (though still probably overkill) for EMG training, which might go up to 100 or 200 Hz or more, so a 512 rate (the top one offered by the Pendant) is plenty fast enough. For EEG, use 256.