Tips for Conducting Better Assessments

See Tech Support for information on how to do brain maps. This section provides tips for gathering better data.

In the TQ guide we recommend that assessments be recorded before 11am.

The assessment gives the trainer a starting place. I know what NOT to aim at, and I have some ideas about where there seems to be a “bad energy habit” that might be producing the states and behaviors the client would like to change. But even with all that, I can’t say with any degree of certainty which protocol is going to make the change until I’ve tried it and seen how the client (not necessarily the EEG) responds.

Activation Tasks for Special Situations

Tasks for a Nonverbal Client

Assuming that by nonverbal you mean not speaking or writing (not that the client is unable to understand verbal material), I’ve used the following:

Digit span: holding up a series of fingers, like catcher’s signals, and having client repeat them

Reading: I’ve used a set of words on a page, speaking one and asking the client to point to it; if that’s not feasible, do what comes to you. The central strip is not really a reading area, so you can do any general activation task.

Calculation: adapt the serial calculation using blocks and a number-board for answers, etc.

Listening: again, I would tell a story and then ask the client to point to pictures or numbers to answer questions about it.

Visual task: Where’s Waldo? is one I’ve used with lots of kids, as are the puzzles in Highlights magazine.

Remember that what the brain is doing during the task is more important than how well the client performs on it.

Assessing a Blind Client

I’ve done an assessment of a person with seriously compromised vision, but he could still see light and vague shapes, so I have absolutely no idea what to expect in terms of alpha blocking from eyes-closed to eyes-open tasks in a completely blind client. It is presumed that data coming into the visual cortex is what causes alpha to block, and in a completely blind person that obviously would not happen. That could result either in no increase in alpha with eyes closed (because the client is always alert through the other senses) or in no blocking of alpha when the eyes are opened.

As for the tasks, you could substitute any language task (e.g., reading aloud to her) for the central strip reading, and the idea of having her read Braille is a good one too. Regardless of how the information comes in, the language areas should be activated. The pattern recognition (midline) task will be much harder. Here again, I suppose you could ask her to find certain word or letter combinations, but we won’t really see the visual cortex activate for that in any case.

Assessing a Client with Significant Brain Damage

Time to drag out your creativity glasses.

I’ve done several assessments with folks whose brains were seriously compromised. Usually we’ve been able to find tasks roughly in the same range as the ones in the assessment. I’ve had people listen to a digit or two, then “repeat” them by holding up that number of fingers or pointing to the numbers on a page showing the 10 digits; do simple one-digit additions and point to the answers; and so on.

If they can’t do any of this type of thing, then I would simply have someone stand in their field of vision and talk to them, explaining something and gesturing–anything to try to see if the brain can activate (and how it does so) when a cognitive task is required. You might be surprised.

Certainly it’s possible to just do the heads page and gather only the first two minutes of data (EC and EO) to see what happens, but it’s worth a little effort to get the third (task) minute, if possible.

Assessing a Very Young Child

Generally I wouldn’t even try to do an assessment on a 5-year-old. Their ability to sit quietly even for 15-20 minutes is very limited. Usually I would just have the parents complete the Client Report. You can load that into the TQ8 without loading any EEG data, and it will still give you a plan. If the child is very calm, go ahead and do the assessment, but record only EC and EO, and perhaps skip the 5th step of the assessment so you do only the first four; that will give you the info you need.

Artifacting

Let’s take a moment to clarify the concept of artifacting.

When we do an assessment, we need to see the client’s brain data as completely and accurately as possible, with as little non-brain electrical activity leaking in as possible. As you probably know, when recording toward the front of the head (though it can appear as far back as the parietals if a client is wearing contact lenses), the electrical signals produced by each eyeball and by the muscles that blink and move the eyes appear as slow-wave activity in the EEG, and they are so much larger than brain signals that they can swamp the EEG reading. Especially around the temporal lobes, the masseter muscles used for chewing (often a place where people hold tension), along with other muscles in the neck, face and head, can also produce strong electrical signals that appear as fast-wave activity in the EEG.

One of the most important things to understand is that the best artifacting is done while RECORDING the assessment. To have a reasonable chance of getting good brain information, we need at least 30 seconds of clean data (50% of the 60 seconds recorded for each condition). While it’s possible to remove discrete bursts of artifact (like an eye blink or a movement), muscular tension or electromagnetic interference can be a constant presence that is impossible to remove.

With practice you can recognize artifacts by looking at the power spectrum or the oscilloscope. A surge in both channels that raises the amplitudes of all frequencies below 6 Hz together is almost certainly eye artifact; in the oscilloscope it shows as a large waveform that looks like an “S” tipped on its side. A sharp surge in ALL frequencies in the power spectrum, especially visible above 20 Hz or so, can indicate bracing or clenching of muscles; the oscilloscope then shows very condensed, sharp waves.

There are also graphs on the trainer’s window to help identify fast or slow artifact bursts.

Getting the client to sit comfortably, with both feet flat on the floor (use a little footstool for short legs so they don’t dangle), is very important. If necessary, do a little relaxation with the client before starting the recording. I usually ask the client to let his mouth hang slightly open so he doesn’t clench his teeth. When recording frontal sites EO or at task, have the client keep his head up but look down at the floor in front of his feet. This keeps the eyes partially closed so they don’t dry out quickly, and blinks tend to be less frequent and very small. Most of the tasks can be done this way as well.

Each eye blink disrupts the signal for 2-4 seconds, and it’s not uncommon for people to blink 15-20 times a minute. If you want a good assessment, it’s crucial to minimize blinking and eye-movement effects. While some people blink or shift their eyes a lot even with them closed, most do fine with EC. Asking the client to very lightly place the tip of an index finger on each eyelid during the EC recording can help in cases where uncontrolled eye movements during EC are a problem.
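A rough worst-case calculation shows why blinking matters so much. This sketch assumes each blink knocks out a fixed number of seconds and that the disrupted stretches never overlap (real blinks often cluster, so actual losses are usually smaller); the function names are illustrative and not part of any software.

```python
def seconds_lost_to_blinks(blinks_per_minute: float, seconds_per_blink: float,
                           recording_seconds: int = 60) -> float:
    """Worst-case seconds of a recording disrupted by blinks.

    Assumes every blink disrupts `seconds_per_blink` seconds and that the
    disrupted stretches never overlap; the result is capped at the recording length.
    """
    blinks = blinks_per_minute * recording_seconds / 60
    return min(blinks * seconds_per_blink, recording_seconds)


def enough_clean_data(blinks_per_minute: float, seconds_per_blink: float,
                      recording_seconds: int = 60) -> bool:
    """True if at least half the recording survives (the 30-of-60-seconds rule)."""
    lost = seconds_lost_to_blinks(blinks_per_minute, seconds_per_blink,
                                  recording_seconds)
    return recording_seconds - lost >= recording_seconds / 2


# 15 blinks a minute at 2 s each already uses up the whole 30-second margin.
print(seconds_lost_to_blinks(15, 2), enough_clean_data(15, 2))
# 20 blinks a minute at 3 s each could wipe out the entire minute.
print(seconds_lost_to_blinks(20, 3), enough_clean_data(20, 3))
```

Even the low end of the quoted ranges leaves no slack, which is why controlling blinks during recording beats trying to artifact them out later.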

One last point: if the best artifacting is done during recording, and if auto-artifacting can usually do the necessary cleaning when there isn’t major artifact, why bother having a manual override that lets you change targets?

There are conditions like hot temporals or a hot cingulate, where fast-wave activity in an area is well out of the normal range, or white-matter head injuries with spikes of delta. Auto-artifacting may screen these out as artifact, and letting that happen will reduce the accuracy and usefulness of the assessment. Being able to adjust the thresholds is critical, and that’s why you have that option.

No artifact in the record; no important data excluded from the record. That’s the goal.

Deciding which Data to Include or Exclude

Artifacting is a bit of an art, so it can seem confusing, but it’s also pretty common sense, which can make it quite simple.

The goal is to get as much useful information about the brain INTO the assessment as possible without distorting it by including non-brain activity. The assessment shows you the maps for the EC, EO and Task recordings: which ones passed (green, meaning at least 30 seconds of good data were available), which ones didn’t, and why. If the assessment is all green, as many are (and more will be as you get more experienced in recording), just accept it. If not, either reject the failing data out of hand (obvious artifact) or look into it and make a decision.

First Rule: If the heads are all blotched with colors, only 50 or 60% green, you have either a bad recording or a very difficult client. If the recording was bad, apologize and record again, better this time. If the client was the problem, try some HEG and/or calming EEG protocols for 10 sessions. (You may not even need to reassess.)

The best way to avoid artifact is not to record it in the first place. Watch the power spectrum to verify that the signals are symmetric. When they aren’t, re-prep the sites and re-record to see whether the two sides are more alike. (Midline sites compare the front of the brain with the back, so they may not be as symmetrical.) Verify that you are recording accurate brain patterns, then record.

Most often there will be a few blocks of color, commonly in areas like F7/F8, F3/F4, Fp1/Fp2, and sometimes Fz/Pz. These are generally frontal sites, usually recorded EO or at task, that show eye-blink or eye-movement artifact in the slow frequencies when the trainer and client don’t stay focused on minimizing eye activity during recording. In most cases, I would agree with the program and exclude them.

Second Rule: Task data is nice, but it’s not strongly considered in training planning. Get in as much EC and EO data as possible without distorting the data.

Each site pair’s row beneath the graphs shows the percent of each state (EC/EO/TSK) that passed. Any value below 50% means that data won’t get into the assessment. If the pass percent is 47% or 39%, I’ll probably take a look at whether to revisit that decision. If 47% is passing, then we only need 3% more to be able to use the data. 3% of 60 seconds is 1.8 seconds, so if I can change just 2 of the non-pass values to pass, we’ll be able to include those sites.
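The "how many more seconds do I need" arithmetic can be written as a tiny helper. This is a sketch that assumes the displayed pass percent is a whole number and each condition lasts 60 seconds; because the display is rounded, the true count may differ by a second.

```python
def extra_seconds_needed(pass_percent: int, total_seconds: int = 60,
                         required_percent: int = 50) -> int:
    """Seconds that would have to flip from 'fail' to 'pass' to reach the cutoff.

    Converts the percentage shortfall into seconds and rounds up to a whole
    second. Integer ceiling division avoids floating-point rounding surprises.
    """
    shortfall = max(required_percent - pass_percent, 0)
    return -(-shortfall * total_seconds // 100)  # ceiling of shortfall * total / 100


for pct in (47, 43, 39, 14):
    print(pct, extra_seconds_needed(pct))
```

The closer the pass percent is to 50, the fewer borderline seconds you need to find, which is why the near misses are worth a second look and the distant ones usually are not.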

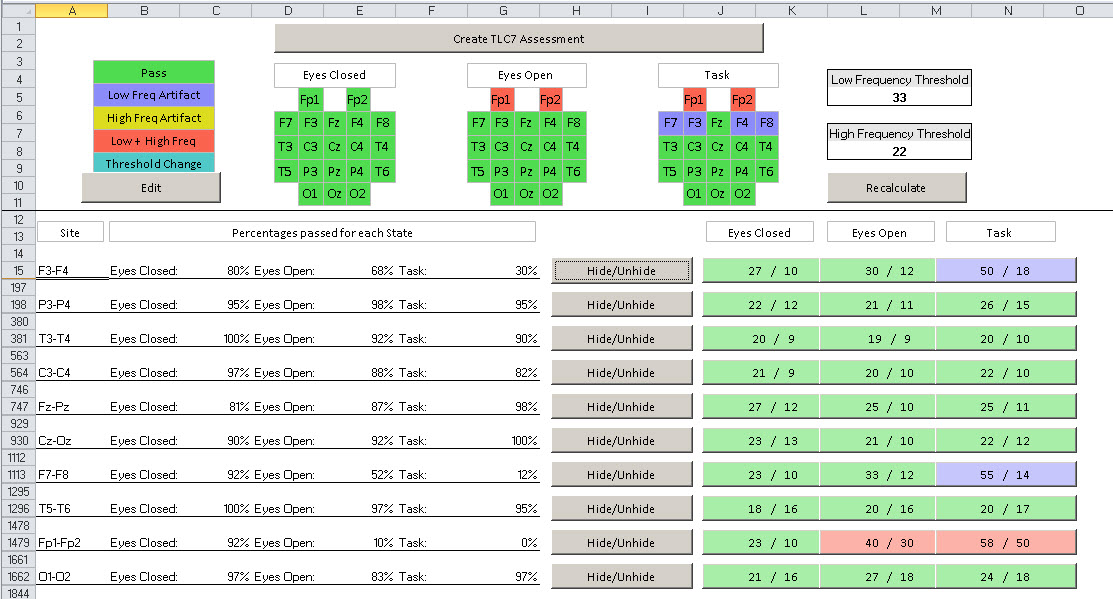

Clicking the Hide/Unhide button, I can see the actual values for each second, with the artifact in red. As I scan down the red numbers in the Slow column, I may see that the software set the threshold to 27 µV, and I find 3-4 values in the range from 27.1 to 30 µV. It’s doubtful that these are artifact; they’ll have a minor effect on the reported levels, and adding them will make the assessment more complete.

The buttons to the right in each site pair’s row show the minimum artifact thresholds that would allow the site to pass. You can easily compare them against the thresholds in use (listed in the upper right). If my slow cutoff is set at 33, and I see that raising it to 39 would allow a site to pass, I’ll likely change it for the whole sheet to 39 (Recalculate).
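One way such a minimum pass threshold could be computed is sketched below. We don't know how the TQ8 actually derives these numbers; the sketch assumes a second passes when neither its slow nor its fast value exceeds the corresponding threshold, and that 50% of the seconds must pass.

```python
import math


def min_slow_threshold(seconds, fast_threshold, required_fraction=0.5):
    """Smallest slow-wave threshold that lets enough seconds pass.

    `seconds` is a list of (slow, fast) values, one pair per one-second epoch.
    A second passes when neither value exceeds its threshold. Returns None when
    too few seconds pass the fast threshold for any slow setting to help.
    """
    if not seconds:
        return None
    needed = math.ceil(required_fraction * len(seconds))
    # Only seconds that already pass the fast threshold can be rescued.
    candidates = sorted(slow for slow, fast in seconds if fast <= fast_threshold)
    if len(candidates) < needed:
        return None
    # A threshold at the needed-th smallest slow value passes at least `needed`
    # seconds; any lower threshold passes fewer.
    return candidates[needed - 1]


# Hypothetical minute: 25 quiet seconds, 10 slightly high, 25 blink-like.
minute = [(20, 10)] * 25 + [(38, 10)] * 10 + [(60, 10)] * 25
print(min_slow_threshold(minute, fast_threshold=33))  # 38
```

Comparing the result with the threshold in use (33 here) shows how far you would have to stretch: 38 is a modest move, much like the 33-to-39 example, while a result near 48 would be the kind of jump the text treats with suspicion.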

But if I see that I’d have to change the cutoff to 48, that’s a much bigger jump. I might look deeper into the data, but in most cases I’d just exclude the site.

Artifacting Example

Our goal in the first phase of the assessment process is to get the clearest, most accurate, highest-resolution image of the brain’s activation patterns we can. That means removing as much artifact as we can. It also means NOT removing data that is likely to be good and important because we decide it’s artifact.

Hot temporal lobes or a hot cingulate or a delta spike related to a head injury can all cause significant false positives, tricking the auto-artifacting system. But those things, if they are NOT artifact, are important patterns in the brain we will want to train. Not allowing them in will reduce the effectiveness of the assessment.

When you look at the artifacting page, you see the selected threshold numbers for low-frequency artifact (often related to eye blinks, eye movement, cable movement, etc.) and high-frequency artifact (tension or movement). If you click the Hide/Unhide button on any of the site sets, you’ll see in the first column a list of epochs: 0-1 second, 1-2 seconds, and so on up to 179-180. The second column (Low Frequency) contains the sum of 2-4 Hz and 4-6 Hz activity in that second for all 4 sites. The third column (High Frequency) does the same for 23-38 and 38-42 Hz.
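Taking that description literally, each row can be sketched as follows. This is a sketch under stated assumptions: per-second amplitudes are already available for each band at each site, the two slow bands (and the two fast bands) are simply summed across all four sites as described, and a second is rejected when either sum exceeds its threshold. The TQ8's exact internal calculation may differ.

```python
SLOW_BANDS = [(2, 4), (4, 6)]      # Hz, the "Low Frequency" column
FAST_BANDS = [(23, 38), (38, 42)]  # Hz, the "High Frequency" column


def epoch_row(sites, slow_threshold, fast_threshold):
    """Return (low, high, rejected) for one second of data.

    `sites` holds one dict per recorded site, mapping a (low_hz, high_hz) band
    to its amplitude for that second.
    """
    low = sum(site[band] for site in sites for band in SLOW_BANDS)
    high = sum(site[band] for site in sites for band in FAST_BANDS)
    rejected = low > slow_threshold or high > fast_threshold
    return low, high, rejected


def pass_percent(epochs, slow_threshold, fast_threshold):
    """Percent of one-second epochs that were not rejected."""
    rows = [epoch_row(sites, slow_threshold, fast_threshold) for sites in epochs]
    passed = sum(1 for _, _, rejected in rows if not rejected)
    return 100 * passed / len(rows)


# Hypothetical values for one quiet second and one blink-like second.
quiet = {(2, 4): 2, (4, 6): 2, (23, 38): 1, (38, 42): 0.5}
blink = {(2, 4): 8, (4, 6): 6, (23, 38): 1, (38, 42): 0.5}
epochs = [[quiet] * 4, [quiet] * 4, [quiet] * 4, [blink] * 4]
print(epoch_row([blink] * 4, 33, 33))  # (56, 6.0, True)
print(pass_percent(epochs, 33, 33))    # 75.0
```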

As you scroll down the list, you’ll see some rows red, others black.

Red rows are recommended for rejection as artifact based on the calculation of the spreadsheet. The number in the Low or High frequency column (or sometimes both) will be bold-faced, indicating that THIS was the number which exceeded the threshold and removed the data.

As you read across the top line, with the sites identified, you can see the percent of seconds that passed (neither the slow nor the fast frequency threshold was exceeded in that second). I’m looking at a file in front of me, and I see a slow threshold of 33 (microvolts). The first rejected data line I see is because of a slow frequency value of 35. Do I really think that second is artifact? I doubt it. It just got caught a little on the wrong side of the line.

Is it likely that either removing or including that line, with a value so close to the expected values, will change the final data (the average of all accepted seconds)? Very unlikely.

Now I scroll down further and suddenly find a stretch of 9 seconds where the value goes from 37 all the way up to 79, with several readings in the mid-40’s and mid-50’s, before ending with a 37. That sounds like a numerical representation of what I see on the power spectrum while recording an eye blink: all the slow frequencies surge out together and return together. Adding those values into the calculation of the average amplitude will make a noticeable difference, because there are a number of them and they are all well above the amplitudes before and after. I’ll leave them out.
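The difference between an isolated second just over the line and a sustained surge can be made explicit by grouping consecutive over-threshold seconds into runs. The "surge" rule at the end (a minimum run length and how far the peak climbs past the threshold) is a hypothetical rule of thumb for illustration, not a TQ8 setting.

```python
def rejected_runs(values, threshold):
    """Group consecutive over-threshold seconds.

    Returns a list of (start_second, length, peak_value) tuples.
    """
    runs = []
    start = None
    for i, v in enumerate(values):
        if v > threshold and start is None:
            start = i  # a run begins
        elif v <= threshold and start is not None:
            runs.append((start, i - start, max(values[start:i])))
            start = None  # the run ended on the previous second
    if start is not None:  # run still open at the end of the recording
        runs.append((start, len(values) - start, max(values[start:])))
    return runs


def looks_like_surge(run, threshold, min_length=3, min_excess=10):
    """Hypothetical rule: a long run that climbs well past the threshold."""
    _, length, peak = run
    return length >= min_length and peak - threshold >= min_excess


# A lone 35, then a 9-second stretch that climbs from 37 to 79 and back to 37.
slow = [20, 35, 20, 20, 37, 45, 56, 79, 55, 44, 41, 38, 37, 20]
for run in rejected_runs(slow, 33):
    print(run, looks_like_surge(run, 33))
```

The lone 35 comes out as a one-second run barely over the line, while the blink-like stretch is a long run peaking far above it: the same distinction the text draws by eye.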

You can go to that level of detail if it interests you, but there is also a quick and easy way on the artifacting page itself, using two numbers: the percent of seconds that passed in the area in question, and the “pass targets” listed to the right of each row.

If 43% passed, then I’d need to find another 7% (7% × 60 seconds = 4.2, so 5 seconds) of data that could reasonably be passed. If 14% passed, then I’ll need to find 22 seconds (36% × 60 = 21.6), a harder job. So the closer the pass % is to 50%, the easier it is to get the data in.

If the selected thresholds are, say, 33 for slow and 33 for fast, and the pass targets in the blue button for a site failing because of slow artifact are 50/18 (slow/fast), then I would have to increase my low-frequency threshold by about 50% (from 33 to 50). That’s a VERY big adjustment, and it would have a major effect on the values loaded into the assessment.
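Expressing each proposed threshold change as a percentage of the current setting makes these judgment calls easier to compare. A minimal sketch; where to draw the line remains the trainer's call.

```python
def relative_increase(current: float, proposed: float) -> float:
    """Fractional increase needed to move a threshold from `current` to `proposed`."""
    return (proposed - current) / current


# Threshold changes mentioned in this section, all starting from 33.
for proposed in (39, 42, 48, 50):
    print(f"33 -> {proposed}: {relative_increase(33, proposed):.0%}")
```

Moving from 33 to 50 is a roughly 52% increase, nearly three times the 18% needed to go from 33 to 39.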

So now I have to ask myself whether there is a valid reason either for keeping the data out or adjusting to get it in.

I notice that 3 of the 4 sites not passing are Task recordings, and they are at F3/F4, F7/F8 and Fp1/Fp2–all very far frontal sites. The fourth site not passing is Fp1/Fp2 Eyes open. Eyes closed, all these sites look fine. Eyes open are okay except in the site closest to the eyes. Clients have the hardest time remembering their eye position when they are asked to perform a task (especially when, as at F7/F8 and Fp1/Fp2, the task involves using the eyes). It’s a pretty easy decision for me not to stretch the limits by 50% in order to pass data that I’m pretty positive are artifact.

But what if T3 and T4 aren’t passing because of high-frequency signals suspected of being artifact? If I know that when I recorded those sites I made sure the client let his mouth hang open (so there was no bracing of the jaw muscles), and I noticed while watching the recording that there was significant fast activity anyway, then I’m not so confident it’s artifact. After all, we have a category called “hot temporals,” where these two sites (sometimes along with F7/F8 or T5/T6) show more fast activation than other brain sites.

If I see that I would have to raise the threshold from, say, 33 to 42 in order to pass at least the EC and EO data, I’d probably do it. I might also use the Hide/Unhide button to scroll down the data. If I find the values run consistently within a range, maybe 34-39 µV, again, that doesn’t look like artifact. There’s no surging, no on/off. It’s just a more activated area.

There are some key assumptions built into the auto-artifacting process:

1. That one set of targets for slow and fast activity (where artifacts are most likely to appear as surges in amplitude) can work for the whole brain. We tried setting thresholds for each site, but it turned out badly. Key: remember that all the sites are compared against the same value, so a site with unusual activity may show high levels of “artifact” that are really EEG.

2. Those targets are based on EC and EO parietal and central sites–areas where artifact is not easily produced. Obviously if recordings are bad at those sites, the targets may not be very useful, but if you can’t get good recordings in central and parietal, the whole assessment may be in question.
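Those two assumptions can be sketched as follows. The rule used here (mean plus two standard deviations of the per-second values from central and parietal EC/EO recordings) and the site labels are hypothetical; the actual TQ8 formula isn't described in this section. The point is that one threshold, derived from "quiet" sites, is then applied everywhere, so a genuinely hot site can look like solid artifact.

```python
from statistics import mean, pstdev

# Hypothetical labels for the central and parietal site pairs.
REFERENCE_SITES = {"C3/C4", "P3/P4"}


def global_threshold(per_second, k=2.0):
    """One threshold for the whole head, derived from EC/EO central and parietal data.

    `per_second` maps (site_pair, condition) to a list of per-second values.
    """
    pooled = [value
              for (site, condition), values in per_second.items()
              if site in REFERENCE_SITES and condition in ("EC", "EO")
              for value in values]
    return mean(pooled) + k * pstdev(pooled)


def fraction_over(values, threshold):
    """Share of seconds that this single threshold would reject."""
    return sum(v > threshold for v in values) / len(values)


data = {
    ("C3/C4", "EC"): [10, 10, 10, 10],
    ("P3/P4", "EO"): [14, 14, 14, 14],
    ("T3/T4", "EC"): [40, 41, 39, 40],  # steadily "hot" temporals
}
limit = global_threshold(data)
print(limit, fraction_over(data[("T3/T4", "EC")], limit))
```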

Blinking

Almost any task will be done with the eyes open, will likely involve moving them, and will probably include blinking.

If you watch the replay of the recording, watch the power spectrum for surges where all the slow frequencies go up and come back down at once. In the oscilloscope, watch for big excursions from the baseline that look like an “S” tipped on its side.

Over-Artifacting

In the early-to-mid 90’s, when I was just starting out with an A620 amp, I was learning a lot from Joel & Judith Lubar, who were about 90 minutes away in Knoxville. We were using a 1C amp, and software had been created for artifacting, which I was learning to use. Joel had shown it to me a month or so before, and he was in the office, sitting behind me as I started trying to look at each 2-second epoch and decide whether it had artifact or not. After about 2 minutes of watching me obsess, Joel stood up to go, suggesting: “Go through this one time as carefully as you can. Make sure you get every bit of artifact. Then go through it again and just take out the obvious stuff. See how they compare.” I did so, and there was almost no difference at all. Serious muscle artifact or eye-movement artifact makes a clear excursion in the waveforms (or the power spectral displays). Just remove those and you’ll end up with a very good signal pretty quickly.

Slow and Fast-Wave Artifact

I worry about slow (eye movement/cable movement) and fast (muscle bracing or movement) artifacts, and I worry a lot more about those that are built into the signal. If you can get a client to physically relax and be still for one minute at a time, you can remove artifact when there is a movement or tensing. But if the client is tense throughout, there’s no way to remove it. You get good recordings when you are recording, not when you are artifacting. If you have to watch interocular tracings, EMG tracings, etc. to see whether the client is tense or blinking, you can’t be watching the one thing you really SHOULD be watching: the client.

Threshold Changes for One Site or All

In most cases I prefer to use the same thresholds for all sites. However, if I see something like hot temporals, I may change only for the temporal lobes. Very rarely would I change just for one site and one condition.
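The layering described above (one set of thresholds for everything, an occasional regional exception, and a very rare single-site, single-condition exception) can be sketched as a simple lookup. The names and values below are hypothetical, not TQ8 settings.

```python
DEFAULT_THRESHOLDS = {"slow": 33, "fast": 33}

# Hypothetical regional override: raise the fast threshold for the temporal pairs only.
SITE_OVERRIDES = {"T3/T4": {"fast": 42}, "T5/T6": {"fast": 42}}
# Rarely needed: overrides keyed by (site_pair, condition).
SITE_CONDITION_OVERRIDES = {}


def thresholds_for(site_pair, condition):
    """Defaults, then site-level overrides, then site+condition overrides."""
    result = dict(DEFAULT_THRESHOLDS)
    result.update(SITE_OVERRIDES.get(site_pair, {}))
    result.update(SITE_CONDITION_OVERRIDES.get((site_pair, condition), {}))
    return result


print(thresholds_for("T3/T4", "EC"))
print(thresholds_for("C3/C4", "EO"))
```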

Collection Timing

You can’t just record one set of sites a few days after recording the rest and slip them into the assessment. The brain changes at least hourly, in activation levels and more. The closer together the sites are recorded, the better.

Electrodes and Electrode Placement

Some brain trainers use the saline cap we recommend. Some use electrodes of various types. Some still use gel caps. Whatever you choose, it is never a good idea to mix electrodes, and certainly not different metals. Ideally use electrodes that are all about the same age and degree of use to minimize offset.

Ground

The function of the ground electrode is to help avoid overloading the amplifier if you should have a static discharge or some other powerful signal. The ground gives the signal a way to get off the body other than through the amplifier’s circuits. It is also used as a comparison for the active(s) and reference(s). The amplifier uses “common-mode rejection”–canceling out any signal that appears in all sites–as a way of removing background noise.
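An idealized sketch of common-mode rejection: interference that appears identically at the active and reference leads cancels when the amplifier takes their difference, while brain activity present only under the active lead survives. Real amplifiers reject common-mode signals only partially, and this toy model leaves out the ground's role entirely; the sampling rate and amplitudes are made-up values.

```python
import math

FS = 256  # samples per second (assumed)


def tone(freq_hz, amplitude, n=FS, fs=FS):
    """A sampled sine wave."""
    return [amplitude * math.sin(2 * math.pi * freq_hz * i / fs) for i in range(n)]


def differential(active, reference):
    """Ideal differential amplifier: active minus reference, sample by sample."""
    return [a - r for a, r in zip(active, reference)]


alpha = tone(10, 20)    # brain activity under the active electrode only
mains = tone(60, 100)   # interference picked up equally by both leads
active = [b + m for b, m in zip(alpha, mains)]
reference = list(mains)

output = differential(active, reference)
residual = max(abs(o - b) for o, b in zip(output, alpha))
print(f"largest leftover interference: {residual:.2e}")
```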

The ground can be placed anywhere on the client’s body, but the quality of the signal will be affected by the quality of the ground connection (as with all other electrodes). Ideally the ground would be on a site equidistant from the two active sites, but it’s not critical. It can be anywhere on the head, on the back of the neck, behind an ear, on the earlobe, or even (with certain systems) on the client’s wrist.

References

One of the basic rules is that, if you are using an ear reference, you place it on the same side as your head lead. Thus C4/A2 is correct, and C3/A1 is correct; C4/A1 would not be.
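The same-side rule follows from the 10-20 naming convention: odd numbers are on the left hemisphere, even numbers on the right, and "z" marks the midline; A1 is the left ear and A2 the right. A minimal helper, assuming standard site names. It deliberately returns None for midline sites, where this rule doesn't decide and the choice of ear changes which hemisphere you are looking across.

```python
def ear_reference(site: str):
    """Same-side ear reference for a 10-20 site name, or None for midline sites."""
    if site.lower().endswith("z"):
        return None  # midline (Fz, Cz, Pz, ...): the same-side rule doesn't apply
    digits = "".join(ch for ch in site if ch.isdigit())
    if not digits:
        raise ValueError(f"not a lateral 10-20 site: {site!r}")
    # Odd numbers are left hemisphere (A1), even numbers right hemisphere (A2).
    return "A1" if int(digits) % 2 == 1 else "A2"


for site in ("C3", "C4", "Fp1", "T6", "Cz"):
    print(site, ear_reference(site))
```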

Good Signals

You should be able to tell whether you have a trainable signal with a quick look at the oscilloscope and power spectrum. With modern amplifiers, the old “standard” of below 10 kΩ for training and below 5 kΩ for research is essentially meaningless. This means that if you have a newer amplifier, a separate impedance meter is unnecessary.

I learned long ago that lead placement is technique, technique, technique. If you are using individual electrodes, prep each spot lightly for 10 seconds, put a mound of paste over the electrode head, and make sure at least some of it is in contact with the scalp; you’ll get consistently low impedances. The real benefit of the impedance meter I used in the past was that it measured offset between electrodes. There again, if you work with sets of electrodes purchased at the same time, of the same type and the same material, it is super-rare to run into offset issues.

Bald heads have tougher skin, so I usually prep them a little more than usual. You don’t have to shave a bare spot on the head to get a good connection. I’ve worked with a few clients with remarkably thick (densely populated) hair where it was difficult to get to the scalp, but even with those it was not a major issue.

If you have extremely dense hair, where you simply can’t spread it away from a spot of scalp and get a space of clear scalp that is visible, using combs or bobby pins or such to part the hair away from the site will usually help hold it away. If you can get the scalp visible, a small dab of Ten/20 paste lightly rubbed into the scalp at that site will usually help give good connections AND help hold the hair. I go for a “starburst” pattern if I do that, so the hair spreads away from the open area in all directions. Then use a blob of paste that fills the electrode surface or cup and mounds up so that one could fill a second electrode facing the opposite direction with the mound. Place the tip of the mound on that small area of clear scalp and lightly wiggle the electrode down and into place so it is sitting on a pad of paste.

More paste won’t give you better connections; it will give you worse ones. When you have giant globs of paste in the client’s hair, you are creating a lovely little antenna to pick up signals from the environment.

Many people prefer the saline system for several reasons. First, it is often used without prepping, though I would recommend prepping even with this system. Second, you are pressing (actually holding with the head apparatus) the edge of an electrode, encased in some kind of material that keeps it wet with saline solution, against the scalp, so a much smaller bit of scalp will work. Third, the saline solution, like any water, flows through hair and makes contact with the scalp, so getting a good conductive path from scalp to electrode is easier.

An electrode picks up activity from a circle about 6 cm² in area, centered on the electrode, because the bone tends to “smear” the electrical signal a bit (bone is not a very good conductor of electricity). And in a monopolar (referential) or bipolar (sequential) montage, you are indeed measuring between the two (active and reference) electrodes.

But what is it we are measuring? It’s not amps (flow of current) but voltage (the difference in electrical potential that “drives” electrons from the more activated to the less activated site.) You can’t have voltage at one site. Voltage is a comparison value between two sites. But it’s not exactly measured AT the two sites. It’s measured BETWEEN them. If you could stand at the Active electrode and look toward the reference electrode, and at the same time could stand at the reference and look toward the active electrode, you would only see about 30% of the EEG signals between them: those that were primarily lined up between the electrodes and fairly close to one or the other electrodes.

So, it does matter where you put the reference. If you measure from Cz to A1, you’ll be looking over the left hemisphere; if you change your reference to A2, you’ll be looking over the right hemisphere. And remember that, although we SAY that the earlobes are inert sites, we know that, in fact, each does have a signal that is picked up from the field emanating from the temporal lobe. Since the temporals are often quite different in signal, the ears also can be quite different.

Repeating the Assessment

Should results change after training?

Sure. If brains were neat mechanistic systems (as psychology assumes) rather than complex chaotic systems, that would work great. We need to understand that the “normative” databases used in population-based QEEGs (and hence in most of the research that has produced the patterns we identify) are based on the assumption that brain measures produce what statistics calls normal distributions. But chaos theory tells us that this is not the case in chaotic systems. Each such system is completely unique, self-referential and self-reinforcing, dependent on its initial conditions. In short, EVERY chaotic system is perfectly normal given the conditions that led to its formation.

“A complex system is a system composed of many components which may interact with each other. In many cases it is useful to represent such a system as a network where the nodes represent the components and the links their interactions.” Sounds a lot like a brain, doesn’t it? Or an ecology, a weather system or an economy: all systems that are notoriously difficult to “model” and control (at least if you expect your models to produce estimates anywhere near the actual results of the system).

The idea of whole-brain training is not that if we can just change a variable or two by feeding back information about it, the brain will neatly make a change in that variable and BINGO, we’ve succeeded. Rather we use the assessment to identify variables that are correlated with systems that demonstrate certain tendencies (e.g. anxiety, internal focus or peak performance). Hopefully we all understand that correlation does not equal causation. In other words, a fast right-rear quadrant doesn’t “cause” anxiety or terminal insomnia–nor do they cause a brain to have a fast right-rear quadrant. They often co-exist, but likely both are related to other factors, some perhaps we cannot even measure.

Our goal is not to identify the levers to use to make the brain do more of what we want it to. Rather, it is to disturb the brain’s habit patterns in multiple ways by applying feedback, the main driver of complex chaotic systems. Ideally these multiple nudges result in the whole system (the homeostasis) shifting in a desired direction and establishing a new stable range of operation. Whether that means all the measures we trained will change is an open question.

My position, ever since my early days trying to convince people to let me put wires on their heads and mess around with electricity (before neurofeedback was something anyone had heard of), has been this: I don’t care whether the Theta/Beta ratio changes. I care whether the client can pay attention more effectively. And in most cases, unless there is an engineer or accountant parent, the client couldn’t care less about the ratio if the teacher or boss or spouse stops complaining, or if work can be done faster and more easily. Of course, “scientists” and left-hemisphere thinkers who cannot accept experience as meaningful MUST have something to measure, choosing to ignore the famous dictum: Not everything that can be counted counts. Not everything that counts can be counted.

Signal Issues

There are two places you can look to see if your signal is decent (meaning probably that you have good connections) or not.

The Oscilloscope should show a fine single line for each signal included in it that cycles back and forth across the baseline and it should show variability–not a highly regular, mechanical-looking waveform.

The Power Spectrum should show the amplitudes (height of the bars) for all frequencies (they’re listed across the bottom of the screen in this design), and they too should be fluidly changing. The one thing you must look for is a huge spike at 60 Hz if you are in a country that has a 60 Hz electrical system like the US or at 50Hz if you are in a country with a 50 Hz signal.
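Numerically, that check amounts to comparing the amplitude at the mains frequency with the amplitudes a few hertz on either side. The sketch below uses a plain single-bin DFT from the standard library rather than a real FFT, and the 5× ratio and neighbor offsets are hypothetical choices; it assumes a one-second window at an integer sampling rate so each bin is exactly 1 Hz wide.

```python
import math

FS = 256  # samples per second (assumed); one second of data gives 1-Hz bins


def amplitude_at(samples, freq_hz, fs=FS):
    """Amplitude of the `freq_hz` component via a single-bin DFT."""
    n = len(samples)
    re = sum(s * math.cos(2 * math.pi * freq_hz * i / fs) for i, s in enumerate(samples))
    im = sum(s * math.sin(2 * math.pi * freq_hz * i / fs) for i, s in enumerate(samples))
    return 2 * math.hypot(re, im) / n


def has_mains_spike(samples, mains_hz=60, ratio=5.0, fs=FS):
    """True when the mains bin towers over nearby bins (hypothetical rule)."""
    peak = amplitude_at(samples, mains_hz, fs)
    nearby = [amplitude_at(samples, mains_hz + d, fs) for d in (-6, -4, 4, 6)]
    baseline = max(sum(nearby) / len(nearby), 1e-9)
    return peak > ratio * baseline


def tone(freq_hz, amplitude, n=FS, fs=FS):
    return [amplitude * math.sin(2 * math.pi * freq_hz * i / fs) for i in range(n)]


def mix(*signals):
    return [sum(values) for values in zip(*signals)]


# Alpha plus low-level activity around 60 Hz, with and without line noise.
clean = mix(tone(10, 20), *(tone(f, 2) for f in (54, 56, 60, 64, 66)))
noisy = mix(clean, tone(60, 50))
print(has_mains_spike(clean), has_mains_spike(noisy))
print(has_mains_spike(noisy, mains_hz=50))  # a 50 Hz system check on the same data
```

On real recordings the window rarely holds a whole number of cycles, so practical software would use a windowed FFT; the idea of "one bin far above its neighbors" stays the same.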

If you start a minute of recording and immediately see that it is bad, click Pause, fix the electrodes, then start again. It’s easy enough to remove bad signal if it does not continue beyond a few seconds. The assessment requires a minimum of 50% good data.

I always ask clients to let their mouths hang open during the measurements, so they cannot tense the jaw muscles.

Sync Errors

Sync errors are shown when something momentarily interferes with communication between an amplifier and the computer, or between the amplifier and the receiver/dongle. They are more common with wireless amps. Often it means someone put an arm or other body part between the two units, which should be able to “see” each other at all times for ideal signal processing. A brief flash of the Sync Error notification is not a problem. If it stays on, training data may be lost.

TQ – The Trainer’s Q

QEEG just means quantitative EEG. That involves digital (rather than analog) recording of the EEG from 18-128 sites, usually at least with eyes closed and eyes open, though often also at task. Data should have artifacts removed (signals that appear in the EEG but do not originate in the brain, like eyeblinks, electrical interference, etc.) The data are then presented in various views that allow the brain to be described in terms of frequency, amplitude/magnitude (power), variability and connectivity–the primary measures of brain function. In most cases the data are recorded using an electrocap, though individual electrodes can be used. The recording should be completed within 20-30 minutes so that brain activation is consistent throughout.

There are amplifiers with 19, 24 and more channels that allow all data to be recorded at once, and other systems that use amplifiers with 2 or 4 channels that gather it serially with minimal delay.

The QEEG was essentially developed as a research tool, and the design of what most people think of as a Q is based on that approach. Q’s have been used by a number of researchers over the past decade or two to compare sub-groups of the population with specific problems against the population as a whole to generate pattern analyses that define ways in which people in the sub-group reliably differ from the population as a whole (e.g. how do the brains of anxious people tend to differ?)

The TQ8 uses a cap and gathers eyes-closed, eyes-open and task data from the standard 20 EEG sites, 4 channels at a time, in about 20 minutes. It removes artifact from the data and presents standard maps, graphs and tables of brain EEG data in amplitude/frequency, symmetry, synchrony/connectivity, and variability. That’s a quantitative EEG.
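The numbers hang together: with 20 sites recorded 4 at a time there are five placements, and three one-minute conditions at each placement add up to 15 minutes of actual recording. The sketch assumes one minute per condition, as elsewhere in this section; the rest of the quoted 20 minutes would presumably go to setup and moving between steps.

```python
import math


def recording_time(sites=20, channels=4, conditions=("EC", "EO", "Task"),
                   seconds_per_condition=60):
    """Return (number of placements, minutes of recording) for a serial assessment."""
    placements = math.ceil(sites / channels)
    minutes = placements * len(conditions) * seconds_per_condition / 60
    return placements, minutes


print(recording_time())                         # full assessment
print(recording_time(conditions=("EC", "EO")))  # EC and EO only
```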

The difference is in how the data are used. “Population-based” analyses compare the individual brain against a database of people and use z-score analysis to identify every measure in which the individual differs from the population average by a certain number of standard deviations. This is perhaps helpful for research, though because of the greater statistical validity it is more likely to be used to compare sub-groups against the population than to compare one individual against it. From the trainer’s perspective, it makes two significant assumptions: a) that all deviations from the mean are negative and should be trained; and b) that clients want to be “average” in every way. A researcher using pattern analysis may find this very useful; a trainer probably won’t. Z-score training doesn’t distinguish, in the words of Jay Gunkelman, between a broken leg and a crutch. Both deviate from “average.” So would the brain of Einstein, or of a person with special talents in music, math or any other area.

The TQ8 is a “Trainer’s Q”. It uses the data descriptively rather than normatively, applying a pattern-based analysis that seeks to identify the patterns that QEEG research and empirical observation have linked to specific types of problems. The population Q tends to gather data at a level of detail that is not generally very useful to trainers. For example, we know that theta and alpha can each be divided into “slow” and “fast” bands (4-6 and 6-8 Hz for theta, 8-10 and 10-12 Hz for alpha), and these relate to specific differences in perception and performance. Outside of research, however, there’s no real benefit to looking at individual 4, 5, 6, 7, 8 Hz (etc.) bands, as the population Q does. So while some of our users do research with the TQ8 (on training approaches), the population Q is probably better suited to research; and while some people use the population Q to build training plans, I would argue that the TQ8 is better suited to that.
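To show what grouping fine-grained data into broader bands means in practice, here is a small sketch that sums 1 Hz bins into the four 2 Hz slow/fast theta and alpha bands. It assumes hypothetical power values (µV²) keyed by the lower edge of each 1 Hz bin; the function name and the numbers are made up for illustration. With 1 Hz bins, summing power gives the power of the wider band (amplitudes would have to be squared first, since amplitudes do not add).

```python
"""Sketch: grouping 1 Hz bins into 2 Hz slow/fast theta and alpha bands.

Assumption: `power_by_hz` maps the lower edge of each 1 Hz bin (4 means 4-5 Hz)
to power in microvolts squared. The example values are invented.
"""
from typing import Dict, Tuple

# Slow/fast theta and alpha bands, as [low, high) in Hz.
BANDS: Dict[str, Tuple[int, int]] = {
    "slow theta": (4, 6),
    "fast theta": (6, 8),
    "slow alpha": (8, 10),
    "fast alpha": (10, 12),
}


def group_bands(power_by_hz: Dict[int, float]) -> Dict[str, float]:
    """Sum the power of every 1 Hz bin that falls inside each band."""
    return {
        name: sum((p for hz, p in power_by_hz.items() if low <= hz < high), 0.0)
        for name, (low, high) in BANDS.items()
    }


if __name__ == "__main__":
    bins = {4: 3.0, 5: 2.5, 6: 2.0, 7: 2.0, 8: 4.0, 9: 6.0, 10: 5.0, 11: 3.5}
    print(group_bands(bins))
    # {'slow theta': 5.5, 'fast theta': 4.0, 'slow alpha': 10.0, 'fast alpha': 8.5}
```

Four numbers per site are far easier to interpret for training purposes than eight separate 1 Hz bins, which is the trade-off the paragraph describes.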

Showing Clients Assessments

I don’t usually give clients the assessment at all. I sit down and explain the Training Plan and the Training Objectives, and that’s it. The rest is unnecessary, often confusing, and frequently causes no end of headaches once folks start wanting to see how the numbers have changed (which they may or may not have). The assessment is for YOU, to guide YOU in preparing a plan to get them where they want to be. Keep their attention focused on real-world changes and on reporting their progress accurately.

TQ vs QEEG

I agree that Jay’s definition of QEEG is pretty clear and complete. It states:

Quantitative Electroencephalography (qEEG) is a procedure that processes the recorded EEG activity from a multi-electrode recording using a computer. This multi-channel EEG data is processed with various algorithms, such as the “Fourier” classically, or in more modern applications “Wavelet” analysis. The digital data is statistically analyzed, sometimes comparing values with “normative” database reference values. The processed EEG is commonly converted into color maps of brain functioning called “Brain maps”.

Various analytic approaches exist, from commercial databases to database free approaches, such as EEG phenotype analysis.

The TQ8 processes a multi-electrode recording using the Fourier transform and converts the results into color maps and tables of brain functioning. Jay mentions phenotypes as an alternative to commercial databases, and that is exactly what the TQ8 uses. In fact, I corresponded with him over a decade ago comparing his phenotypes with the TQ patterns, and we found they were almost exactly alike, though his do not give weight to the temporals, as the TQ does, and we don’t do a visual analysis of the raw EEG, as he does.
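For readers curious about what processing with the Fourier transform involves, the sketch below estimates the power in a frequency band for one channel using a naive discrete Fourier transform. It assumes a 128 Hz sampling rate, a one-second synthetic 10 Hz sine wave in place of real EEG, and no windowing or averaging. The function name is hypothetical, and real QEEG software typically uses a fast Fourier transform plus windowing and epoch averaging, so treat this as an illustration of the principle only.

```python
"""Sketch: estimating band power with a discrete Fourier transform.

Assumptions: one channel, a naive O(N^2) DFT written out for clarity, no
windowing. With N samples at fs Hz, each DFT bin is fs / N Hz wide.
"""
import cmath
import math
from typing import List


def band_power(samples: List[float], fs: float, low: float, high: float) -> float:
    """One-sided power (signal units squared) in [low, high) Hz.

    Skips the DC and Nyquist bins for simplicity, so it is intended for bands
    well inside (0, fs / 2).
    """
    n = len(samples)
    resolution = fs / n  # width of one DFT bin in Hz
    total = 0.0
    for k in range(1, n // 2):
        freq = k * resolution
        if low <= freq < high:
            coeff = sum(
                x * cmath.exp(-2j * math.pi * k * t / n)
                for t, x in enumerate(samples)
            )
            # Factor 2 folds in the matching negative-frequency bin.
            total += 2 * abs(coeff) ** 2 / n**2
    return total


if __name__ == "__main__":
    fs = 128  # assumed sampling rate in Hz
    # One second of a pure 10 Hz, 20 microvolt sine wave (synthetic data).
    signal = [20.0 * math.sin(2 * math.pi * 10 * t / fs) for t in range(fs)]
    print(round(band_power(signal, fs, 8, 12), 3))  # alpha: 200.0
    print(round(band_power(signal, fs, 4, 8), 3))   # theta: 0.0
```

A sine wave of amplitude A carries A²/2 of power, so the 20 µV wave yields 200 µV², all of it in the 8-12 Hz alpha band and essentially none in theta.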

Since our focus is on trainers, the TQ8 deals with the issues we find most important to them: how all that analysis translates into actual training recommendations, and how much of many real-world clients’ increasingly limited resources must be spent before training begins. The whole-brain training plan works multiple patterns in a circuit, so we nearly always find that within the first five sessions one or more of them has produced a positive response, which reinforces the client’s commitment to training. Also, since whole-brain training works to undo the brain’s linked activation system from multiple points of view, it often produces a new stability within 2, 3 or 4 cycles. And it’s very hard to beat the cost: 30 minutes of time with the same $4000 system that is used for training itself!

The population-based Q is essentially a research tool that gathers massive amounts of data about the brain and compares it against a normative database of people who were determined to have “normal” brains. If you are familiar with statistics, you’ll recognize that those readings were used to create a set of means and standard deviations for each age-group/sex combination. These are used to produce what, for most people, is the most useful part of a QEEG report: the z-scores, which measure how many standard deviations each of the thousands of measures lies from the mean for the comparison group.
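The z-score calculation itself is simple: subtract the comparison group’s mean from the client’s value and divide by the group’s standard deviation. A minimal sketch, assuming three invented measures and invented norms (a real database supplies norms per age group and sex for thousands of measures) and an illustrative cutoff of 2 standard deviations; all names and numbers are hypothetical.

```python
"""Sketch: z-scores of one client's measures against a normative group.

Assumptions: the (mean, standard deviation) pairs below are invented for
illustration, standing in for one age-group/sex combination.
"""
from typing import Dict, Tuple

# Hypothetical norms: measure -> (group mean, group standard deviation), in uV.
NORMS: Dict[str, Tuple[float, float]] = {
    "Fz theta amplitude": (8.0, 2.0),
    "Pz alpha amplitude": (12.0, 3.0),
    "Cz beta amplitude": (6.0, 1.5),
}


def z_scores(client: Dict[str, float],
             norms: Dict[str, Tuple[float, float]]) -> Dict[str, float]:
    """z = (client value - group mean) / group standard deviation."""
    return {name: (client[name] - mean) / sd for name, (mean, sd) in norms.items()}


def flagged(z: Dict[str, float], cutoff: float = 2.0) -> Dict[str, float]:
    """Measures at least `cutoff` standard deviations from the mean, either direction."""
    return {name: value for name, value in z.items() if abs(value) >= cutoff}


if __name__ == "__main__":
    client = {"Fz theta amplitude": 13.0, "Pz alpha amplitude": 10.5,
              "Cz beta amplitude": 6.75}
    z = z_scores(client, NORMS)
    print(z)           # {'Fz theta amplitude': 2.5, 'Pz alpha amplitude': -0.5, 'Cz beta amplitude': 0.5}
    print(flagged(z))  # {'Fz theta amplitude': 2.5}
```

Note that `flagged` reports only distance from the average; it cannot tell whether a flagged measure is a problem, an adaptation to a problem, or a talent.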

Of course, it’s highly doubtful that ANY of the “normal” brains actually had low z-scores in all measures, so even “normal” brains are “abnormal” in some or many of the measures. Just looking at the z-scores, as some “readers” do, isn’t particularly helpful: you need to know a good deal about brain function and expected patterns to determine which, if any, of the z-score measures actually relate to the real-world changes the client is seeking. You also need to be able to tell the difference between a “broken leg” and a “crutch.” One is a problem; the other is an adaptation to the problem, but both will appear as high z-scores, and training away the crutch may not be very helpful. Finally, you need to be comfortable with the whole idea of a “normal” brain. I like to ask people, “Do you really believe that a poet and an accountant will have the same brain?” If not, which one is normal? Norming is a useful concept in medicine and engineering, but psychology has tried to apply it to a field where it may make a lot less sense. And unlike the norms for fasting blood sugar and other medical values, there are multiple QEEG databases that don’t all agree on what is “normal”, so it’s important to know which one was used.
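The point that even “normal” brains will look “abnormal” on some measures follows from basic statistics. The sketch below assumes, unrealistically, that every measure is independent and that people from the normative population have standard-normal z-scores; under that simplification, about 4.6% of measures fall beyond ±2 standard deviations by chance alone. The function names and measure counts are illustrative.

```python
"""Sketch: how many measures a 'normal' brain is expected to flag by chance.

Assumptions: every measure's z-score is independent and standard normal for
people drawn from the normative population. This is an idealised
illustration, not a property of any particular database.
"""
import math


def two_tailed_p(cutoff: float) -> float:
    """Probability that a standard-normal z-score has |z| >= cutoff."""
    return math.erfc(cutoff / math.sqrt(2))


def expected_flags(n_measures: int, cutoff: float) -> float:
    """Expected count of measures beyond the cutoff in a 'normal' brain."""
    return n_measures * two_tailed_p(cutoff)


def prob_none_flagged(n_measures: int, cutoff: float) -> float:
    """Chance that a 'normal' brain has no measure beyond the cutoff."""
    return (1 - two_tailed_p(cutoff)) ** n_measures


if __name__ == "__main__":
    for n in (100, 1000, 5000):
        print(n, round(expected_flags(n, 2.0), 1), f"{prob_none_flagged(n, 2.0):.2g}")
```

With 1,000 independent measures and a cutoff of 2, about 45 measures would be flagged on average in a perfectly “normal” brain, and the chance of none being flagged is vanishingly small. Correlation between real QEEG measures leaves the expected count unchanged but makes the chance of no flags higher than this independent-case figure.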

The TQ pattern-based QEEG, which you could gather yourself using the same hardware and software (BT2) you will use to train, is not normed. It is descriptive: it allows us to say whether a brain is fast or slow, or fast in front and slow in back, and so on. More importantly, it draws on the results of many research studies that used QEEG to identify the patterns associated with various symptom constellations. You then schedule an online meeting with someone from brain-trainer to go through what your TQ shows, what your training goals are, and why you are testing specific interventions.

When a person is highly anxious, for example, there are a number of EEG patterns that are very likely to appear in their brain and won’t appear in the brains of people who are not anxious. The brain-trainer system (the assessment and design package) is designed to guide you to protocols and sites that are likely to produce positive responses relative to the client’s desired changes. You continue training until those changes stabilize.

If you purchased a Q, it would usually come with some kind of reading and set of recommendations, often provided by someone other than the person who gathered the Q. Jay Gunkelman (qeegsupport.com) is probably among the most highly respected readers of Q’s. If I had to have a Q and have someone interpret it, I’d probably go to him, though there are many other good readers in the field as well.